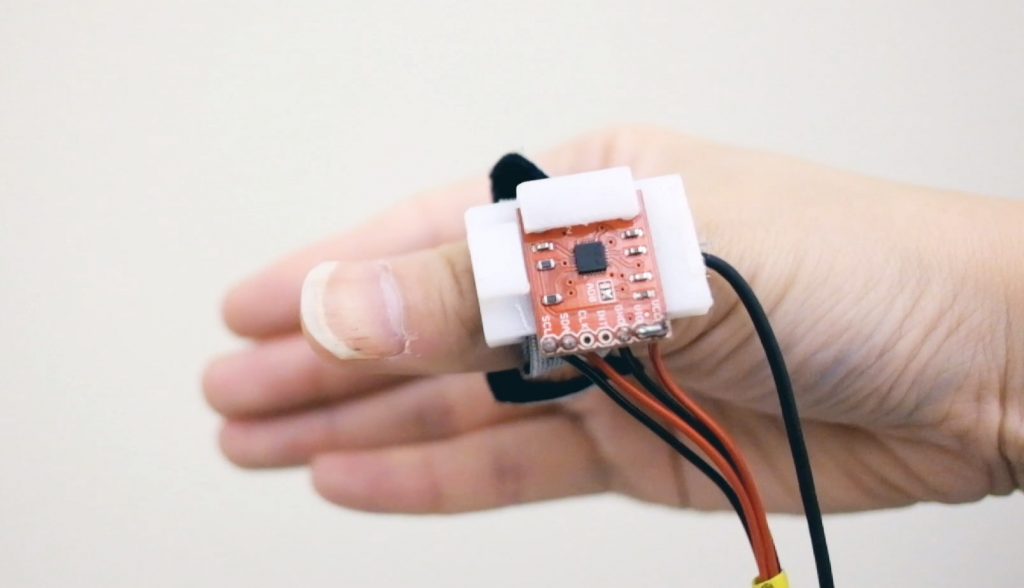

Researchers at Georgia Tech have developed a computerised thumb-ring that can be used to communicate with a computer screen.

A gyroscope and a tiny microphone on the device allow it to track – and then interpret – small gestures made by moving the thumb in relation to adjacent fingers.

Using a system dubbed ‘Fingersound’, the device recognises the beginning and end of a gesture by using the microphone and gyroscope to detect the signal created when the thumb and fingers move against one another.

The technology identifies the beginning and end of each gesture, while also providing tactile feedback. The user moves the thumb to trace the shapes of letters and numbers on the fingers, and these then appear as text on the computer or smartphone screen.

“When a person grabs their phone during a meeting, even if trying to silence it, the gesture can infringe on the conversation or be distracting,” said Thad Starner, the Georgia Tech School of Interactive Computing professor leading the project. “But if they can simply send the call to voicemail, perhaps by writing an ‘x’ on their hand below the table, there isn’t an interruption.”

Because the device can be operated without looking at it, the research team believes that it could have an application in virtual reality (VR) technology, as users would be able to respond to communications without needing to remove a VR head display unit.

“Our system uses sound and movement to identify intended gestures, which improves the accuracy compared to a system just looking for movements,” said Zhang. “For instance, to a gyroscope, random finger movements during walking may look very similar to the thumb gestures. But based on our investigation, the sounds caused by these daily activities are quite different from each other.”

Fingersound sends the sound captured by the contact microphone and the motion data captured by the gyroscope sensor through multiple filtering mechanisms. The system then analyses the filtered signals to determine whether a gesture was performed or whether they were simply noise from other finger-related activity.
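The idea of requiring sound and motion to agree can be illustrated with a short sketch. This is not Fingersound's actual algorithm, which the researchers have not detailed here; the function names, the use of RMS energy and both threshold values are assumptions made purely for illustration.

```python
from math import sqrt

# Hypothetical thresholds chosen for illustration; not values from the research.
SOUND_RMS_THRESHOLD = 0.2
GYRO_RMS_THRESHOLD = 0.5


def rms(samples):
    """Root-mean-square amplitude of a window of samples."""
    if not samples:
        return 0.0
    return sqrt(sum(s * s for s in samples) / len(samples))


def is_gesture_candidate(sound_window, gyro_window):
    """Return True only when both the sound and the motion channel are active."""
    return (rms(sound_window) >= SOUND_RMS_THRESHOLD
            and rms(gyro_window) >= GYRO_RMS_THRESHOLD)


# Thumb rubbing against the fingers: both channels are active.
print(is_gesture_candidate([0.4, -0.5, 0.45, -0.38], [1.0, -0.8, 0.9, -1.1]))
# Hand swinging while walking: plenty of motion but almost no contact sound.
print(is_gesture_candidate([0.01, -0.02, 0.015, -0.01], [1.0, -0.8, 0.9, -1.1]))
```

In this toy version, a window that shows motion without the accompanying contact sound is rejected, which mirrors the reasoning in Zhang's walking example. A real system would presumably go on to classify which letter or number was traced.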

Thanks to New Atlas for bringing this research, which was presented at Ubicomp and the ACM International Symposium on Wearable Computing earlier this year, to our attention.
