
NMAX is described as a modular, scalable architecture that requires a fraction of the DRAM bandwidth of existing neural inferencing solutions.

It is a general-purpose neural inferencing engine that can run any type of neural network (NN), from simple fully connected DNNs to RNNs and CNNs, and can run multiple NNs at a time. NMAX is programmed using TensorFlow and will support other model description languages in the future.

“The difficult challenge in neural network inferencing is minimizing data movement and energy consumption, which is something our interconnect technology can do amazingly well,” says Flex Logix CEO Geoff Tate. “While performance is key in inferencing, what sets NMAX apart is how it handles this data movement while using a fraction of the DRAM bandwidth that other inferencing solutions require. This dramatically cuts the power and cost of these solutions, which is something customers in edge applications require for volume deployment.”

In neural inferencing, the computation consists primarily of trillions of multiply-accumulate (MAC) operations, typically on 8-bit integer inputs and weights and sometimes on 16-bit integers.
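To make the basic operation concrete, here is a minimal sketch of an 8-bit integer dot product built from repeated multiply-accumulates. The article only specifies 8-bit inputs and weights; the 32-bit accumulator width and the helper name `mac_int8` are assumptions for illustration, not a description of NMAX's datapath.

```python
# Illustrative sketch: int8 operands, 32-bit accumulator (assumed width).
INT8_MIN, INT8_MAX = -128, 127
INT32_MIN, INT32_MAX = -(2**31), 2**31 - 1


def mac_int8(inputs: list[int], weights: list[int]) -> int:
    """Dot product of signed 8-bit operands accumulated as a 32-bit integer."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    acc = 0
    for x, w in zip(inputs, weights):
        if not (INT8_MIN <= x <= INT8_MAX and INT8_MIN <= w <= INT8_MAX):
            raise ValueError("operands must fit in a signed 8-bit integer")
        acc += x * w  # one multiply-accumulate, usually counted as 2 operations
        if not (INT32_MIN <= acc <= INT32_MAX):
            raise OverflowError("accumulator exceeded 32 bits")
    return acc


print(mac_int8([1, 2, 3], [4, 5, 6]))
```

A single int8 product fits in 16 bits, but summing thousands of them needs extra headroom, which is why a wider accumulator is a common choice.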

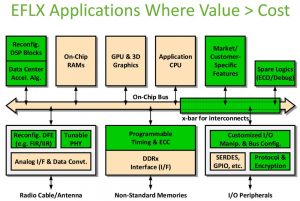

The technology Flex Logix has developed for eFPGA (embedded FPGA) is also ideally suited for inferencing, because eFPGA provides fast, reconfigurable control logic for each network stage.

SRAM in eFPGA can be reconfigured as needed, which matters in neural networks because each layer can require different data sizes. Flex Logix interconnects also allow reconfigurable connections at each stage, from SRAM input banks through MAC clusters and activation to SRAM output banks.
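The per-stage flow described above (input SRAM bank, MAC cluster, activation, output SRAM bank) can be pictured with a small behavioral model. This is a hypothetical sketch, not Flex Logix's implementation: it assumes fully connected layers, ReLU activation, a right-shift requantization back to int8, and an output bank that becomes the next layer's input bank. All function names are invented for illustration.

```python
# Hypothetical behavioral model of layer-by-layer dataflow; not NMAX's design.
INT8_MIN, INT8_MAX = -128, 127


def relu(values: list[int]) -> list[int]:
    """Activation stage: clamp negative accumulators to zero."""
    return [max(0, v) for v in values]


def requantize(values: list[int], shift: int) -> list[int]:
    """Scale accumulators back toward int8 with a right shift, then saturate."""
    return [max(INT8_MIN, min(INT8_MAX, v >> shift)) for v in values]


def run_network(inputs: list[int],
                layers: list[tuple[list[list[int]], int]]) -> list[int]:
    """Run (weights, shift) layers in order; weights has one row per output.

    Layer widths may differ, mirroring the varying data sizes per layer.
    """
    sram_in = list(inputs)  # input bank for the current stage
    for weights, shift in layers:
        # MAC cluster: one dot product per output neuron, reading sram_in
        accumulators = [sum(x * w for x, w in zip(sram_in, row)) for row in weights]
        # Activation, then write results into the output bank
        sram_out = requantize(relu(accumulators), shift)
        # The output bank becomes the next stage's input bank
        sram_in = sram_out
    return sram_in


print(run_network([1, 2], [([[1, 1], [2, -1]], 0), ([[10, 10]], 1)]))
```

Swapping the roles of two banks between stages is a common way to keep data on chip between layers; the article does not say whether NMAX uses exactly this scheme.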

The result is an NMAX tile of 512 MACs with local SRAM, which in 16nm has ~1 TOPS peak performance.
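The ~1 TOPS figure can be related to the tile's 512 MACs with simple arithmetic. The sketch below assumes the usual convention that each MAC counts as two operations (a multiply and an add); the article does not state the tile's clock frequency, so the clock values here are assumptions used only to show the relationship.

```python
# Peak throughput arithmetic; clock frequency is an assumption, not from the article.


def peak_tops(num_macs: int, clock_ghz: float) -> float:
    """Peak tera-operations per second, counting each MAC as 2 operations."""
    ops_per_cycle = 2 * num_macs  # one multiply + one add per MAC per cycle
    return ops_per_cycle * clock_ghz * 1e9 / 1e12


def clock_for_tops(num_macs: int, target_tops: float) -> float:
    """Clock (GHz) needed to reach target_tops with every MAC busy each cycle."""
    return target_tops * 1e12 / (2 * num_macs) / 1e9


print(peak_tops(512, 1.0))       # 512 MACs at an assumed 1 GHz
print(clock_for_tops(512, 1.0))  # clock implied by ~1 TOPS
```

Under these assumptions, ~1 TOPS from 512 MACs corresponds to a clock just under 1 GHz.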

NMAX tiles can be arrayed, without any GDS change, into configurations delivering whatever TOPS is required, with varying amounts of SRAM to optimize for the target neural network model, up to >100 TOPS peak performance.
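As a rough sizing exercise, the number of tiles for a target peak throughput follows from the ~1 TOPS per tile stated above. The near-square grid layout in this sketch is purely a hypothetical assumption; the article does not describe how tiles are physically arranged.

```python
import math

TILE_PEAK_TOPS = 1.0  # ~1 TOPS per 512-MAC tile in 16nm, per the article


def tiles_needed(target_tops: float, tile_tops: float = TILE_PEAK_TOPS) -> int:
    """Smallest whole number of tiles whose combined peak meets target_tops."""
    if target_tops <= 0 or tile_tops <= 0:
        raise ValueError("TOPS values must be positive")
    return math.ceil(target_tops / tile_tops)


def grid_shape(num_tiles: int) -> tuple[int, int]:
    """Hypothetical near-square rows x cols layout that holds num_tiles."""
    rows = max(1, math.isqrt(num_tiles))
    cols = math.ceil(num_tiles / rows)
    return rows, cols


for target in (4.5, 100):
    n = tiles_needed(target)
    print(target, "TOPS ->", n, "tiles, grid", grid_shape(n))
```

This counts peak throughput only; as the examples below show, delivered throughput also depends on MAC utilization and SRAM sizing for the specific model.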

For example, for YOLOv3 real-time object recognition, NMAX arrays of increasing size can be generated to process 1, 2 or 4 cameras with 2-megapixel inputs at 30 frames per second with batch size = 1.

This is done with just ~10 GB/s of DRAM bandwidth, compared with hundreds of GB/s for existing solutions. In this example, MAC utilization is in the 60-80% range, which is much better than existing solutions.
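Bandwidth translates directly into DRAM count. The sketch below assumes a hypothetical DRAM device running at 3200 MT/s on a 32-bit interface (12.8 GB/s peak); that device is an assumption for illustration, and real sustained bandwidth is lower than the peak figure computed here. The 300 GB/s case is likewise a hypothetical stand-in for "hundreds of GB/s".

```python
import math


def dram_bandwidth_gb_s(transfer_rate_mt_s: float, bus_width_bits: int) -> float:
    """Peak bandwidth of one DRAM interface in GB/s (1 GB = 1e9 bytes)."""
    return transfer_rate_mt_s * 1e6 * (bus_width_bits / 8) / 1e9


def drams_needed(required_gb_s: float, per_dram_gb_s: float) -> int:
    """Minimum number of DRAM devices whose combined peak covers the demand."""
    return math.ceil(required_gb_s / per_dram_gb_s)


per_dram = dram_bandwidth_gb_s(3200, 32)  # assumed device: 12.8 GB/s peak
print(drams_needed(10, per_dram))   # ~10 GB/s, as in the YOLOv3 example
print(drams_needed(300, per_dram))  # hypothetical "hundreds of GB/s" case
```

Under this assumption, ~10 GB/s fits within a single device, while a few hundred GB/s would require a couple of dozen.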

Another example is ResNet-50 for image classification.

The three NMAX arrays mentioned above classify 4,600, 9,500 and 19,000 images/second, respectively, all with batch size = 1.

All of these throughputs are achieved with 1 DRAM and about 90% MAC utilization.

By comparison, the Nvidia Tesla T4 needs a batch size of 28 to achieve 3,920 images/second, achieving <25% MAC utilization while using 8 DRAMs.
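MAC utilization is delivered operations divided by peak operations. The sketch below sanity-checks the T4 figure under two assumptions not taken from the article: ResNet-50 needs about 7.7 billion operations per image (a commonly cited ~3.86 billion multiply-accumulates at 224x224; published estimates range from roughly 3.8 to 4.1 billion), and the T4's published INT8 peak is 130 TOPS. This is an illustrative check, not how the article's figures were derived.

```python
# Assumed figures, not from the article:
RESNET50_OPS_PER_IMAGE = 7.7e9  # ~3.86 G multiply-accumulates, 2 ops each
T4_PEAK_INT8_OPS = 130e12       # NVIDIA's published INT8 peak for the Tesla T4


def mac_utilization(images_per_s: float, ops_per_image: float,
                    peak_ops_per_s: float) -> float:
    """Fraction of peak compute actually used at a given throughput."""
    return images_per_s * ops_per_image / peak_ops_per_s


t4 = mac_utilization(3920, RESNET50_OPS_PER_IMAGE, T4_PEAK_INT8_OPS)
print(f"T4 utilization: {t4:.0%}")  # prints "T4 utilization: 23%"
```

Even at the high end of the ResNet-50 estimates (about 8.2 billion operations), the result stays just under 25%, consistent with the article's figure.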

Low batch sizes are very important for all edge applications and many data center applications because they minimize latency; long latency means slower response time.
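The latency cost of batching can be estimated from the throughput numbers above. This is a simplified sketch under stated assumptions: the whole batch completes together after `batch_size / images_per_s` seconds, and, when images come from a single stream, the first frame also waits for the rest of the batch to arrive. It ignores pre/post-processing and data transfer, and the 30 fps single-camera scenario is hypothetical.

```python
from typing import Optional


def batch_latency_ms(batch_size: int, images_per_s: float,
                     arrival_fps: Optional[float] = None) -> float:
    """Rough latency for the first image in a batch, in milliseconds.

    compute: the batch finishes together after batch_size / images_per_s.
    fill: with one stream at arrival_fps, the first frame also waits for the
    remaining batch_size - 1 frames before the batch can start.
    """
    compute_s = batch_size / images_per_s
    fill_s = (batch_size - 1) / arrival_fps if arrival_fps else 0.0
    return (compute_s + fill_s) * 1000


print(batch_latency_ms(28, 3920))      # T4 figures from the article, compute only
print(batch_latency_ms(1, 4600))       # smallest NMAX array, batch size = 1
print(batch_latency_ms(28, 3920, 30))  # one hypothetical 30 fps camera
```

Under these assumptions, batch size 28 at 3,920 images/second implies roughly 7.1 ms before any result is available, versus about 0.22 ms at batch size 1 and 4,600 images/second; if a single 30 fps camera had to fill the batch, the wait would grow to about 907 ms.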

High MAC utilization means less silicon area and cost. Low DRAM bandwidth means fewer DRAMs, lower system cost and less power.
