
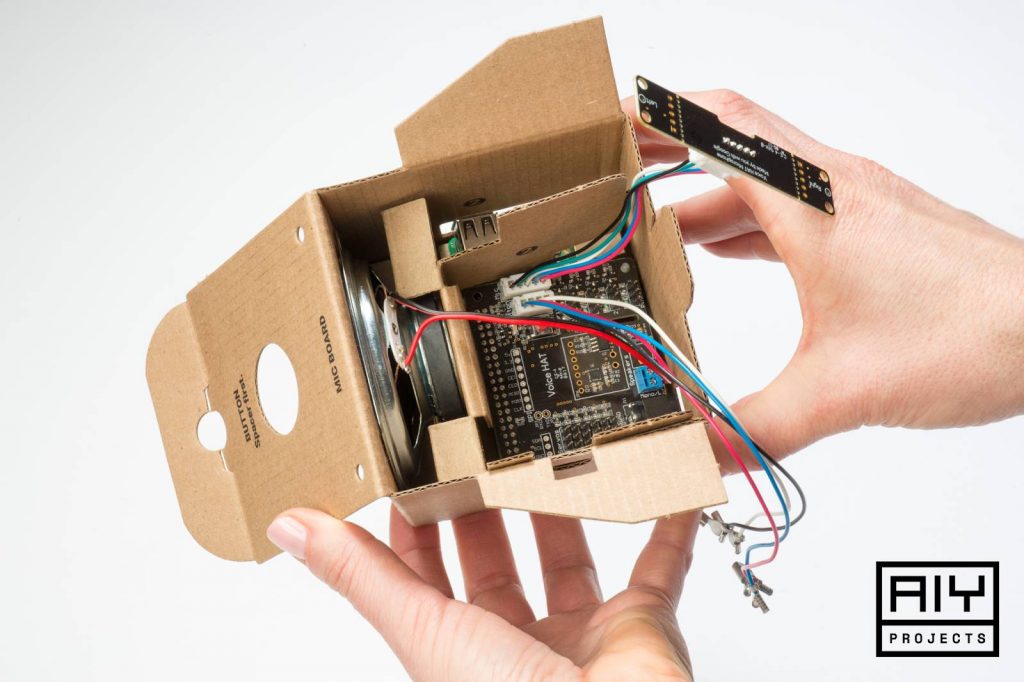

AIY Voice recognition kit

Google recently launched a do-it-yourself voice recognition kit for Raspberry Pi, and the company says its initial offering sold out globally in "just a few hours". It is now announcing that more AIY Voice Kits will be available in stores and online.

The Voice Kit includes a VoiceHAT (Hardware Accessory on Top), a mic board, a speaker, components, connectors and a cardboard housing. It was first made available with issue #57 of The MagPi magazine back in May.

Billy Rutledge, Google’s director of AIY projects, writes:

The Google Assistant SDK is configured by default to bring hotword detection, voice control, natural language understanding, Google’s smarts and more to your Voice Kit. You can extend the project further with local vocabularies using TensorFlow, Google’s open source machine learning framework for custom voice user interfaces.

Our goal with AIY Projects has always been to make artificial intelligence open and accessible for makers of all ages. Makers often strive to solve real world problems in creative ways, and we’re already seeing makers do some cool things with their Voice Kits.

What can makers do with the voice recognition kit? One example cited by Google is a retro-inspired intercom, described as the "1986 Google Pi Intercom". The wall-mounted Raspberry Pi 3 runs the AIY Voice Kit to provide a Google voice assistant.

The maker apparently used a mid-1980s intercom that he bought on sale for £4.

ARCore

Google has also recently released a preview of a new software development kit (SDK) called ARCore, which it says brings augmented reality capabilities to existing and future Android phones.

Dave Burke, Google’s VP of Android Engineering, writes:

We’ve been developing the fundamental technologies that power mobile AR over the last three years with Tango, and ARCore is built on that work. But, it works without any additional hardware, which means it can scale across the Android ecosystem. ARCore will run on millions of devices, starting today with the Pixel and Samsung’s S8, running 7.0 Nougat and above.

We’re targeting 100 million devices at the end of the preview. We’re working with manufacturers like Samsung, Huawei, LG, ASUS and others to make this possible with a consistent bar for quality and high performance.

Google highlights three capabilities, in its own words:

Motion tracking: “Using the phone’s camera to observe feature points in the room and IMU sensor data, ARCore determines both the position and orientation (pose) of the phone as it moves. Virtual objects remain accurately placed.”

Environmental understanding: “It’s common for AR objects to be placed on a floor or a table. ARCore can detect horizontal surfaces using the same feature points it uses for motion tracking.”

Light estimation: “ARCore observes the ambient light in the environment and makes it possible for developers to light virtual objects in ways that match their surroundings, making their appearance even more realistic.”

ARKit

Apple, meanwhile, says it will make its own AR toolset, called ARKit, available to developers when it launches iOS 11, which is expected imminently.

Google is also working on a Visual Positioning Service (VPS), which it highlighted at its Google I/O event, and it is also releasing prototype browsers for web developers so they can start experimenting with AR too.

These custom browsers should allow the creation of AR-enhanced websites that can run on both Android/ARCore and iOS/ARKit.

More information is available from Google.
